As generative AI (GenAI) transforms businesses, education, and the public sector, its security implications have become a critical consideration for organizations of all sizes. At Tech Reformers, we’ve helped numerous clients navigate the complex landscape of AI implementation with AWS solutions. One resource we particularly recommend is AWS’s Generative AI Security Scoping Matrix, a framework that brings clarity to an otherwise complicated security environment.

Understanding the AI Security Challenge

The rapid adoption of GenAI technologies presents unique security challenges that extend beyond traditional security measures. While core security disciplines like identity management, data protection, and application security remain essential, GenAI introduces distinct risks that require specialized consideration.

Organizations often struggle to determine exactly which security measures they need for the way they use AI. This is where AWS’s Generative AI Security Scoping Matrix shines: it offers a structured approach to identifying and implementing the security controls that fit your specific AI implementation.

The Five Scopes of AI Implementation

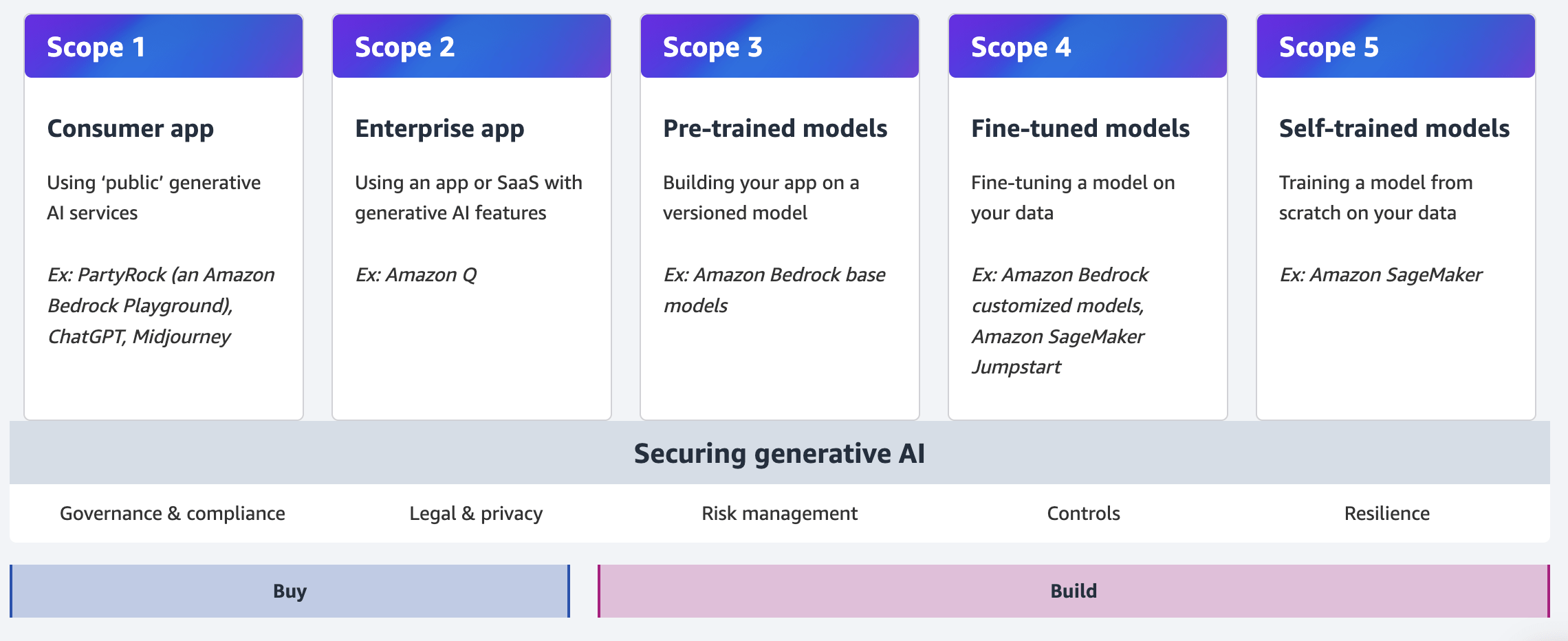

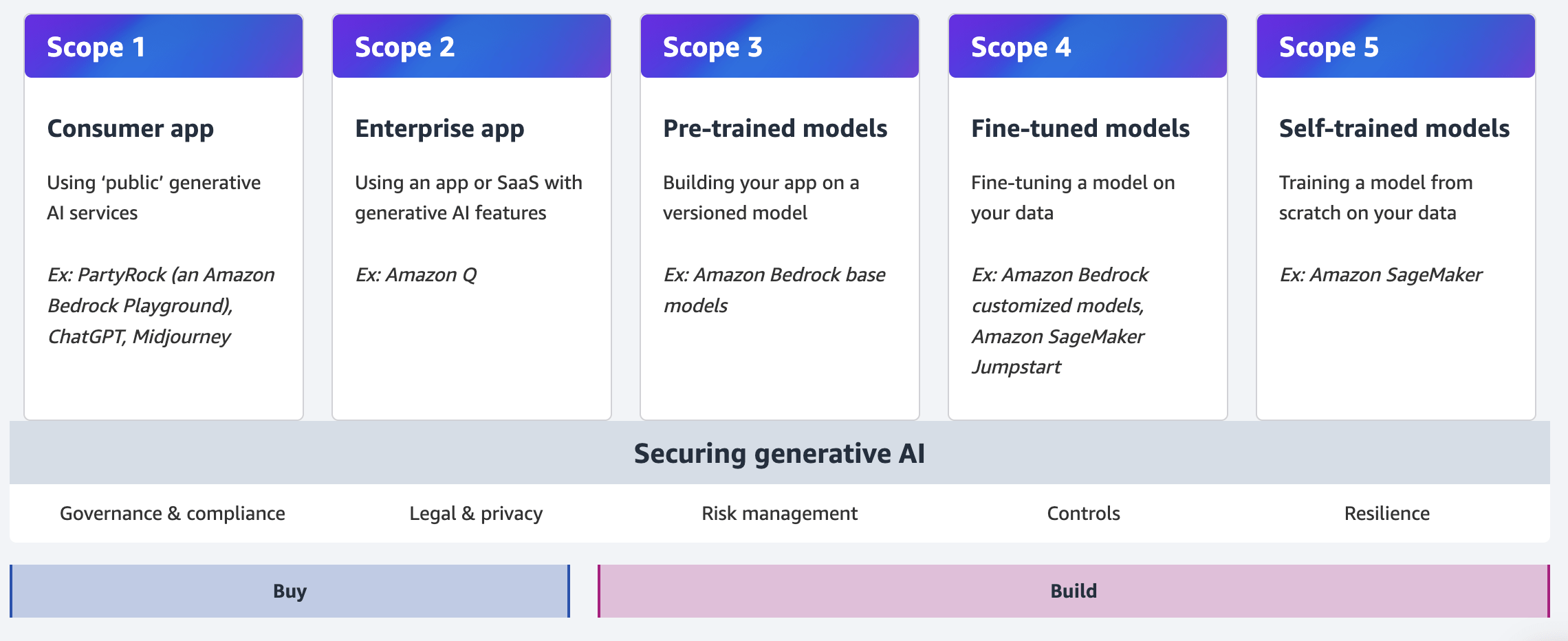

The genius of AWS’s framework lies in its simplicity. It classifies GenAI implementations into five distinct scopes, representing increasing levels of ownership and control over the AI models and associated data.

Scope 1: Consumer Applications

At this level, your organization simply consumes public third-party generative AI services. Think of employees using applications like ChatGPT or Amazon’s PartyRock to generate ideas or content. You don’t own or see the training data or model, and you can’t modify them.

For example, a marketing team member might use a public AI chat application to brainstorm campaign ideas or generate draft copy. The security focus here is primarily on usage governance and data sharing policies.

Scope 2: Enterprise Applications

This scope involves using third-party enterprise applications with embedded generative AI features. Unlike consumer apps, these typically come with a formal business relationship with the vendor and enterprise-grade terms and conditions.

A common example is using enterprise productivity tools that incorporate AI features for meeting scheduling, email drafting, or document summarization. Security considerations expand to include vendor assessment and data handling agreements.

Amazon Q, AWS’s generative AI-powered assistant for working with an organization’s internal data and accelerating software development, is one example of an enterprise application in this scope.

Scope 3: Pre-trained Models

Scope 3 moves into more technical territory: you build your own applications on existing third-party foundation models accessed through APIs. You’re not modifying the model itself, but you are creating custom integrations around it.

Many of our clients operate at this level, building custom applications that leverage foundation models like Anthropic Claude through Amazon Bedrock APIs. These implementations often involve techniques like Retrieval-Augmented Generation (RAG) to enhance models with organization-specific information without changing the model itself.
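To illustrate the RAG pattern, here is a minimal Python sketch that retrieves relevant snippets from a small in-memory document list and prepends them to the user’s question. It uses simple word overlap as a stand-in for the embedding-based vector search a production system would typically use, and the function names and sample documents are hypothetical. The final step of sending the prompt to a foundation model (for example through Amazon Bedrock) is deliberately left out.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for embedding similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap; sorted() is stable, so ties keep their original order.
    ranked = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in ranked[:k]]

def build_prompt(query: str, documents: list[str]) -> str:
    """Prepend retrieved context to the question; the foundation model itself is unchanged."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

# Hypothetical internal knowledge snippets.
docs = [
    "Our refund policy allows returns within 30 days.",
    "Office hours are 9 to 5 on weekdays.",
    "Refund requests are handled by the billing team.",
]
print(build_prompt("What is the refund policy?", docs))
```

One consequence of this design is that organizational knowledge travels in the prompt rather than in the model weights, so access controls on the document store matter as much as controls on the model API.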

Scope 4: Fine-tuned Models

At this scope, organizations take existing foundation models and fine-tune them with specific business data. This creates specialized versions of models tailored to particular domains or tasks.

For instance, a healthcare client might fine-tune a foundation model with medical terminology and documentation standards to improve summarization of patient records in an EHR system. Security considerations now extend to the training data and the resulting specialized model.
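Because the training data now shapes the model, it helps to validate fine-tuning datasets before they are used. The Python sketch below shows a few basic hygiene checks on JSONL records: parseable JSON, required fields, a length cap, and deduplication. The `prompt`/`completion` record shape, the 4,000-character limit, and the `validate_training_jsonl` function are assumptions for illustration; check your model provider’s documentation for its required format. Checks like these catch malformed data, not carefully crafted poisoning.

```python
import json

# Assumed record shape: one JSON object per line with "prompt" and "completion" keys.
REQUIRED_KEYS = ("prompt", "completion")

def validate_training_jsonl(lines: list[str], max_chars: int = 4000) -> tuple[list[dict], list[str]]:
    """Split JSONL lines into accepted records and human-readable rejection reasons."""
    accepted, rejected = [], []
    seen = set()
    for i, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            rejected.append(f"line {i}: not valid JSON")
            continue
        # Every required field must be a non-empty string.
        if not isinstance(record, dict) or not all(
            isinstance(record.get(k), str) and record[k].strip() for k in REQUIRED_KEYS
        ):
            rejected.append(f"line {i}: missing or empty prompt/completion")
            continue
        if any(len(record[k]) > max_chars for k in REQUIRED_KEYS):
            rejected.append(f"line {i}: field exceeds {max_chars} characters")
            continue
        # Exact duplicates can over-weight a single example during fine-tuning.
        key = (record["prompt"], record["completion"])
        if key in seen:
            rejected.append(f"line {i}: duplicate record")
            continue
        seen.add(key)
        accepted.append(record)
    return accepted, rejected

# Hypothetical sample records.
sample = [
    '{"prompt": "Define HbA1c.", "completion": "A measure of average blood glucose."}',
    "not json",
    '{"prompt": "Define HbA1c.", "completion": "A measure of average blood glucose."}',
]
good, problems = validate_training_jsonl(sample)
print(len(good), problems)
```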

Scope 5: Self-trained Models

The most comprehensive level involves building and training generative AI models from scratch using organizational data. This offers maximum control but also requires the most extensive security considerations.

An example might be creating a custom model for specialized video content generation in the media industry. At this level, organizations own every aspect of the model development and deployment.
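As a rough illustration of how the five scopes are ordered by ownership, the Python sketch below maps a few yes/no characteristics of a use case to a scope number. The `AIUseCase` fields and the `classify_scope` function are hypothetical; the matrix is a conceptual framework, not an API, and real use cases often need more nuance than these flags capture. The checks run from most ownership to least, so a fine-tuning project that also calls a model API is reported as Scope 4.

```python
from dataclasses import dataclass

@dataclass
class AIUseCase:
    """Hypothetical description of one GenAI use case; field names are illustrative."""
    name: str
    trains_model_from_scratch: bool = False
    fine_tunes_model: bool = False
    calls_foundation_model_api: bool = False
    uses_enterprise_app: bool = False  # third-party app under an enterprise agreement

def classify_scope(use_case: AIUseCase) -> int:
    """Return the highest applicable scope (1-5), checking the most ownership first."""
    if use_case.trains_model_from_scratch:
        return 5
    if use_case.fine_tunes_model:
        return 4
    if use_case.calls_foundation_model_api:
        return 3
    if use_case.uses_enterprise_app:
        return 2
    return 1  # default: a public consumer application

print(classify_scope(AIUseCase("Support chatbot using RAG", calls_foundation_model_api=True)))
# prints: 3
```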

Security Priorities Across the AI Lifecycle

AWS’s matrix identifies five key security disciplines that span these different implementation types:

Governance and Compliance

As you move from consumer applications to self-trained models, governance requirements increase significantly. For Scopes 1 and 2, focus on terms of service compliance and establishing clear usage policies. For higher scopes, comprehensive governance frameworks become essential, including model development standards and continuous monitoring processes.

Legal and Privacy Considerations

Different regulatory requirements apply based on your implementation scope. Consumer applications require careful attention to what data users submit, while enterprise applications demand robust contractual protections. For self-trained models, compliance with relevant data privacy laws becomes paramount, particularly when handling sensitive information.

Risk Management Strategies

The matrix helps identify potential threats specific to each implementation type. For instance, in Scope 3 implementations, organizations should focus on prompt injection vulnerabilities and API security. For Scopes 4 and 5, data poisoning and model extraction attacks become more significant concerns.
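For Scope 3 applications, one small first-line measure against prompt injection is screening user input for known attack phrasings before it reaches the model. The Python sketch below does this with a handful of regular expressions. The pattern list and the `flag_prompt_injection` function are illustrative assumptions. Pattern matching is easy to evade, so treat it as one layer alongside managed guardrails (such as Amazon Bedrock Guardrails), strict separation of system and user content, and least-privilege access for any tools the model can call.

```python
import re

# Hypothetical, deliberately small pattern list; real attacks vary far more widely.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the |your )?system prompt",
    r"reveal (the |your )?(system prompt|hidden instructions)",
    r"you are now (in )?developer mode",
]

def flag_prompt_injection(user_input: str) -> list[str]:
    """Return the patterns that match the input (case-insensitive); empty means no match."""
    return [p for p in SUSPICIOUS_PATTERNS if re.search(p, user_input, re.IGNORECASE)]
```

A caller might reject or route flagged requests for review, for example by checking `if flag_prompt_injection(text):` before invoking the model.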

Security Controls Implementation

Practical security measures vary across scopes. Lower scopes emphasize access controls and data handling policies, while higher scopes require technical safeguards like training data validation, adversarial testing, and model output filtering.
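Model output filtering can start as simply as redacting sensitive-looking strings before a response is shown to users or written to logs. The Python sketch below applies two illustrative regular expressions, one for email addresses and one for the U.S. Social Security number format. The rule set and the `filter_output` function are hypothetical and far from exhaustive.

```python
import re

# Illustrative patterns only; production filters need broader, locale-aware coverage.
REDACTION_RULES = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def filter_output(text: str) -> str:
    """Replace every match of each rule with a [REDACTED:<label>] placeholder."""
    for label, pattern in REDACTION_RULES.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text

print(filter_output("Contact jane.doe@example.com, SSN 123-45-6789."))
# prints: Contact [REDACTED:EMAIL], SSN [REDACTED:US_SSN].
```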

Resilience Planning

Building resilient AI systems means addressing availability requirements and business continuity considerations. For mission-critical AI applications, this might include redundancy planning, fallback mechanisms, and continuous monitoring solutions.
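A fallback mechanism can be as simple as trying a primary model endpoint and moving to a backup when the call fails. The Python sketch below expresses that with plain callables; `primary_model` and `backup_model` are hypothetical stand-ins for real model clients. A production version would add timeouts, retries with backoff, and monitoring of how often the fallback path is used.

```python
from typing import Callable, Sequence

ModelCall = Callable[[str], str]

def generate_with_fallback(prompt: str, providers: Sequence[tuple[str, ModelCall]]) -> tuple[str, str]:
    """Try each (name, call) pair in order and return (name, response) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # narrow this to the client errors you actually expect
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All model providers failed: " + "; ".join(errors))

# Hypothetical stand-ins for real model clients.
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary endpoint unavailable")

def backup_model(prompt: str) -> str:
    return f"backup answer to: {prompt}"

name, response = generate_with_fallback(
    "Summarize today's incidents",
    [("primary", primary_model), ("backup", backup_model)],
)
print(name, "->", response)
# prints: backup -> backup answer to: Summarize today's incidents
```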

Practical Application for Your Organization

Based on our work with clients across industries, we recommend a phased approach to implementing AWS’s security framework:

- Accurately scope your current and planned AI implementations – Most organizations operate across multiple scopes simultaneously, so mapping your activities to the matrix is an essential first step.

- Prioritize security disciplines based on your specific risk profile – Not all security considerations are equally important for every organization. Identify your most critical concerns based on your industry, data sensitivity, and regulatory environment.

- Implement appropriate controls progressively – Start with fundamental security measures and expand your security program as your AI implementations mature.

- Continuously reassess as your AI strategy evolves – The rapid advancement of AI capabilities means your security approach must be flexible and regularly updated.

Moving Forward with Confidence

The AWS Generative AI Security Scoping Matrix provides a valuable mental model for organizations navigating the complex intersection of innovation and security. By understanding where your implementations fall within this framework, you can apply appropriate security measures without unnecessarily constraining your ability to realize AI’s transformative potential.

At Tech Reformers, we’re helping organizations leverage this framework to build secure, effective AI strategies on AWS. The matrix provides clarity without oversimplification – acknowledging that different AI implementations require distinct security approaches.

To explore the complete Generative AI Security Scoping Matrix and learn more about AWS’s comprehensive approach to AI security, read more at the official AWS resource.

Need help implementing these security principles in your organization? Contact Tech Reformers today to discuss how we can help you build a secure, effective generative AI strategy on AWS.